What rocket engineering can teach us about software engineering—and work in general

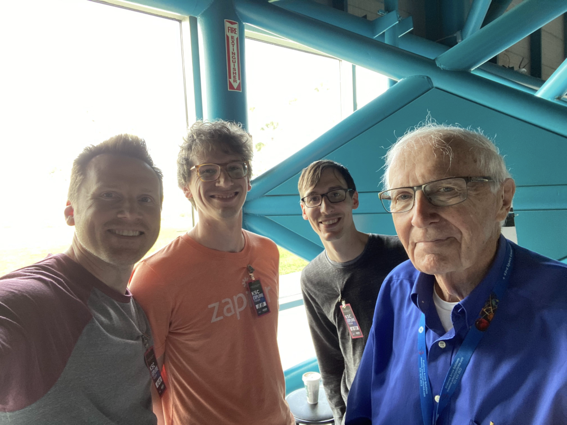

Our first Zapier company retreat of 2020 was in Orlando, which meant I got to spend my free day at the Kennedy Space Center. I went there to look at some big rockets—which I definitely did—but our co-founder Bryan and I quickly started geeking out on all the connections we saw to software engineering. So I'm going to try to tell you how we can all act more like rocket scientists.

If you want a big rocket, try going to the moon

By the time we arrived at the Saturn V, a rocket intended to reach the moon, we had already seen and heard some impressive things. But that massive rocket stopped us in sheer awe. Every problem we face as software engineers seems insignificant compared to building a rocket like that and sending it to a tiny speck of a moon over two hundred thousand miles away.

I spent a couple of days thinking about what an impossible mission it was to get to the moon, and how something like that could succeed while comparatively modest software projects can fail.

But there's one thing that project had that a lot of ours don't have: a clear and tangible goal. The goal was incredibly specific: get to the moon. (It was somewhat implied that they needed to get back, but that was a stretch goal.) The goal wasn't numerical, to reach some arbitrary speed or distance. It wasn't vague, like going somewhere in the solar system. It was to set two human feet on the lunar surface.

The goal was incredibly aggressive, but not so aggressive that it was destined to fail. At the time Kennedy announced the goal in 1961, the U.S. was behind in the Space Race. Six years later, a cabin fire during a launch rehearsal for Apollo 1 killed the entire crew. But the goal was set, and two years later, there was a man on the moon.

Engineering (and product) goals are notoriously vague. We want to "improve onboarding," or we want to "make our product easier to use." In an effort to make them less vague, we often make them arbitrary: "Double the rate of activation" or "cut the setup time in half." While we're at it, we often make the deadlines arbitrary too and just stick "by the end of the year" on the end.

As a result, our goals aren't always exciting and tangible like getting to the moon, and they probably aren't realistic either. Chances are there are specific problems getting in the way of onboarding or making the product hard to use. Those problems may be huge, but as long as they're specific, why not solve those instead?

If it works, that's all that matters

So we have a goal: get to the moon. But there are a few smaller steps before that. One of them: get your massive rocket from the assembly building to the launchpad.

The solution: drive it there on a massive vehicle, of course. But what happens when you realize you need to drive it across a paved road, and your rocket and transporter are so massive that they will literally crush the road? Break out the taxpayer checkbook for some really complex solution? Repave the road? Nope—just throw down some boring old plywood to distribute the load.

Billions of dollars' worth of sophisticated rocket science, and it's shuffled around on cheap, everyday plywood. As our tour guide put it, "if it works, that's all that matters."

As software engineers, how many times are we trying to build rockets when we could just be laying down plywood? We're writing custom code when there's already something off-the-shelf that does what we need. We're choosing the perfect auto-scaling database solution when a traditional database would do just fine. We're reaching for that new web framework to build our simple website.

Other times we do the opposite and use plywood where we should be building a rocket. We keep pushing off building that automated CI pipeline. We're asking engineers to manually seed their database for testing instead of creating a tool for it. We want to improve API performance, but we're not measuring it.
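That last one doesn't need a rocket. As a plywood-grade starting point, here's a minimal Python sketch (assuming the code you care about can be called as a plain function) that times repeated calls and reports median and 95th-percentile latency. `measure_latency` and `fake_endpoint` are hypothetical names, not part of any library.

```python
import statistics
import time
from typing import Callable


def measure_latency(fn: Callable[[], object], runs: int = 200) -> dict[str, float]:
    """Call fn `runs` times (needs runs >= 2) and return latencies in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000)
    # quantiles(n=100) returns the 99 cut points for percentiles 1 through 99.
    cuts = statistics.quantiles(samples, n=100)
    return {"p50": cuts[49], "p95": cuts[94], "max": max(samples)}


def fake_endpoint() -> str:
    """Hypothetical stand-in for a real API handler: roughly 1 ms of 'work'."""
    time.sleep(0.001)
    return "ok"


if __name__ == "__main__":
    stats = measure_latency(fake_endpoint)
    print({name: round(ms, 2) for name, ms in stats.items()})
```

Swap `fake_endpoint` for a call into your own handler. Once you have a baseline number, "improve API performance" becomes a goal you can actually check.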

The point isn't that you should never build a rocket. The point is to save the sophisticated solutions for the problems that demand them.

We gotta go with what we know

This same lesson of pragmatism, separating the plywood solutions from the rocket science, came up again when we talked to Lee Solid, the highlight of our trip.

As the sign said, Mr. Solid is a retired NASA engineer.

He oversaw the teams that built the rocket's engines, and it was fascinating to hear his stories. You could easily imagine him being interviewed for a documentary, but we got to hear them firsthand and ask all the follow-up questions.

One thing he repeated was that things were different back then. He said there were no focus groups. No committees. They basically took direction from Wernher von Braun—the director of NASA's Marshall Space Flight Center—and nobody else. To illustrate, he pointed to the end of the rocket and said, "You see those fins?" We expected some scientific explanation to follow, but instead, he said, "You don't need those fins. Von Braun just liked fins."

Would it be better to just remove the fins if they're unnecessary? Probably. But they had other, bigger problems to deal with, and unnecessary fins weren't something worth worrying about. Lee said that von Braun always said, "We gotta go with what we know." There was no time for debating anything that didn't merit debate. They had to get to the moon.

Before working for NASA, Wernher von Braun was a rocket engineer for Nazi Germany. While we acknowledge his involvement in the space program in this article, we want to be explicit that we condemn his history as a member of the SS.

Of course, this is a tough lesson to get right. We don't want to shut down good ideas. But we do want to focus our good ideas where they count. How often are we debating something that doesn't really matter? Debating rocket colors when we should be building rocket engines? Discussing rocket science when a plywood solution works just fine? Deciding how big to make the rocket when we already know we want to go to the moon?

A lot of the same plywood and rockets examples apply here. If we know how to use a traditional database, and it will work just fine for our project, let's go with what we know. If we aren't having trouble with our web framework or finding engineers to work with it, let's go with what we know. But if we know our customers are having trouble with our product, let's start experimenting with our interface. If we think an animation might explain things better, let's try that. If we know it's taking engineers hours of frustration to deploy changes, it might be time to think outside our box.

Move fast and document things

When you hear Lee Solid talk about how there were no focus groups or committees, you might get the feeling that they didn't follow a lot of processes. This is super weird because when people talk about a slow, methodical project, they often compare it to launching a rocket. "We're not launching a rocket here" is something I've heard (and maybe said) many times.

Well, apparently, launching a rocket isn't even like launching a rocket.

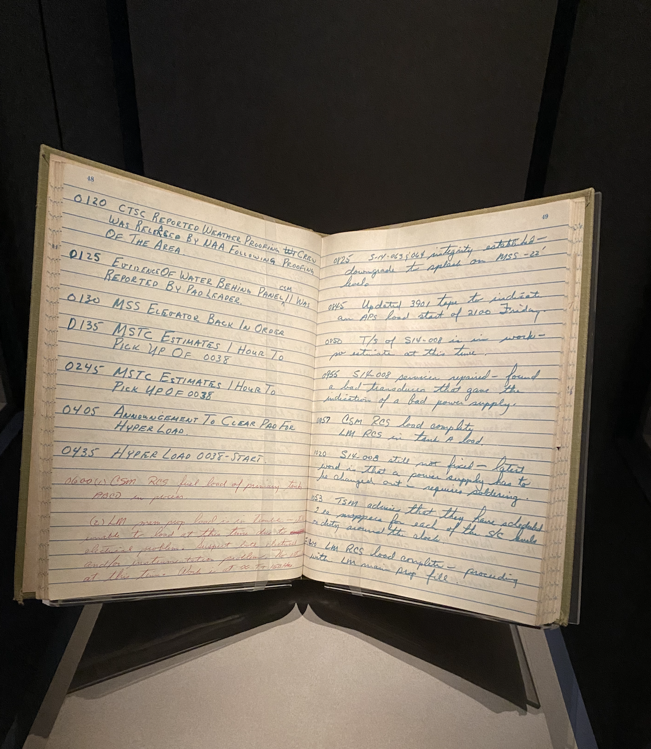

I'm sure there's more nuance to uncover here. I wish we'd had a whole day to talk about process with Lee, because I bet we'd have learned a lot of significant details. This logbook I later came across gave me one clue, though:

The placard underneath (which I didn't think to photograph at the time, but later dug up on Google) read:

Meeting President Kennedy's 1969 deadline for a Moon Landing called for a development and testing program that moved at breakneck speed. The smallest details were meticulously documented for reference and review in handwritten journals like this one.

When we think of doing something at a breakneck pace, the last thing we imagine is carefully documenting everything. But it makes sense. If you're burning billions of dollars and putting lives at risk while the world watches, you can't afford to repeat the same mistakes. When something like the Apollo 1 fire happens, you want to be able to dig through every detail that led to that failure.

This also lines up with a snippet I found from "Lee Solid's Oral History":

And I guess I was taught early on that we are in a high risk business, a tough business. You know when you have a failure you learn as much about it as you can and treat it as a learning experience.

If you're doing something particularly risky, you might think the goal is to do everything you can to avoid failure. But it's almost the opposite. Sure, you do what you can to mitigate the risk, but you don't shy away from failure. You embrace it. You learn from it. And you're only going to learn from it if you've recorded enough data to learn.

Fast also doesn't have to mean stupid. There were thirteen unmanned test launches even before Apollo 1, using the smaller Saturn I and Saturn IB rockets. Apollo 11 was preceded by two crewed missions that reached lunar orbit, Apollo 8 and Apollo 10. That breakneck speed included an iterative learning process to fill all those logbooks. Without that care and attention to detail, we would never have made it to the moon, much less six times.

As software engineers, we need to ask ourselves: Are we really embracing failure? Or are we trying to avoid it? Are we documenting all the necessary information in order to learn? Are we moving fast and taking risks but still acting within reason, taking the appropriate iterative steps to have a safe launch?

If you want to avoid an explosion, you have to cause some explosions

Bryan asked Lee about his biggest challenge while leading teams to build the rocket engines, and he jumped right to one that he said threatened to stop the whole Apollo program: the combustion instability problem.

The short version: They had the unfortunate issue of engines occasionally blowing up. Which is a problem when you're trying to keep the people on top of the rocket alive. Apparently, burning 5,000 pounds of fuel per second is a little bit difficult to control. The slightest variation in fuel flow can increase thrust, which raises pressure, which restricts the fuel supply, which reduces thrust, which decreases pressure, which increases fuel flow… and you can see where this is going.

The cycle, as Lee described it, causes shockwaves that begin to interact and sometimes lead to a catastrophic explosion, essentially ripping the entire engine to pieces.

Thousands (yes, thousands!) of engineers worked on solving this problem. The solution was to add baffles (or dividers) to effectively create smaller streams of exhaust. This smoothed out the overall fuel flow and damped the resulting shockwaves so they wouldn't combine into destructive forces. It seemed to work, but because the instabilities were essentially random events, how could they be sure the fix worked? By making their own explosions. They used small explosive devices to force pressure changes, and the baffles proved they could keep those disturbances from turning catastrophic.

Some of this is familiar from software engineering. How do we defend against security threats? By inviting them through our bounty program. How do we determine whether a particular database will handle the load for a particular application? Drown it in requests so we know the breaking point. And as a matter of course, we recreate the conditions of a bug to make sure it's fixed.
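The "drown it in requests" idea can be sketched as a step-load ramp: fire bursts of increasing size until the error rate crosses a threshold. This is a toy, in-process Python sketch. `ToyService` is a hypothetical stand-in that rejects anything beyond a fixed number of in-flight requests, and the step size and 1% error threshold are arbitrary assumptions. Against a real database, you'd point a dedicated load-testing tool at a staging environment instead.

```python
import asyncio
from typing import Optional


class ToyService:
    """Hypothetical service that rejects requests beyond `capacity` in flight."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.in_flight = 0

    async def handle(self) -> int:
        if self.in_flight >= self.capacity:
            return 503  # overloaded: reject immediately
        self.in_flight += 1
        try:
            await asyncio.sleep(0.01)  # simulated work
            return 200
        finally:
            self.in_flight -= 1


async def burst(service: ToyService, n: int) -> float:
    """Send n concurrent requests and return the fraction that failed."""
    statuses = await asyncio.gather(*(service.handle() for _ in range(n)))
    return sum(status != 200 for status in statuses) / n


async def find_breaking_point(service: ToyService, start: int = 10, step: int = 10,
                              max_load: int = 500,
                              max_error_rate: float = 0.01) -> Optional[int]:
    """Ramp the burst size until the error rate exceeds max_error_rate."""
    load = start
    while load <= max_load:
        if await burst(service, load) > max_error_rate:
            return load
        load += step
    return None  # never broke within the tested range


if __name__ == "__main__":
    print(asyncio.run(find_breaking_point(ToyService(capacity=45))))  # prints 50
```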

But we often forget to blow things up and instead deliver happy path solutions. We test with fast machines with fast internet connections. We expect our customers to click buttons in a particular order. We expect all responses to be 200 OK. Why not start blowing things up? Introduce latency and error responses. Add tests where buttons are clicked out of order.
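Here's one way to start blowing things up on purpose: a minimal Python sketch of fault injection that wraps a call so it sometimes runs slow and sometimes fails, then exercises the client code that's supposed to cope. `with_faults`, `InjectedError`, and `fetch_with_retry` are hypothetical names, and the error rate and delay are assumptions you'd tune. Passing in a seeded `random.Random` keeps test runs reproducible.

```python
import random
import time
from typing import Callable


class InjectedError(Exception):
    """Simulated failure, standing in for a timeout or a 5xx response."""


def with_faults(fn: Callable[[], str], error_rate: float, max_delay: float,
                rng: random.Random) -> Callable[[], str]:
    """Wrap fn so each call may be delayed (up to max_delay seconds) and may fail."""
    def flaky() -> str:
        time.sleep(rng.uniform(0, max_delay))  # injected latency
        if rng.random() < error_rate:
            raise InjectedError("injected failure")
        return fn()
    return flaky


def fetch_with_retry(fetch: Callable[[], str], attempts: int = 5) -> str:
    """Hypothetical client code under test: retry failed calls, then give up."""
    last_error: Exception = RuntimeError("attempts must be >= 1")
    for _ in range(attempts):
        try:
            return fetch()
        except InjectedError as err:
            last_error = err
    raise last_error


if __name__ == "__main__":
    flaky = with_faults(lambda: "ok", error_rate=0.3, max_delay=0.05, rng=random.Random(42))
    print(fetch_with_retry(flaky))
```

The retry loop is deliberately simple (no backoff). The point is that the failure path gets exercised on every test run instead of waiting for production to find it.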

If we know something might fail, we need to cause the failure ourselves so we're ready for it. Because…

If it can go wrong, it will go wrong

The Apollo 1 disaster threatened to kill the Apollo program before it got off the ground (literally). But the Apollo 11 mission was also threatened at the last minute—by a computer error that nobody bothered preparing for. Because nobody thought it was even possible. No simulations had surfaced the error. The live dress rehearsal of Apollo 10, which practiced a partial descent, didn't surface the error either. But the problem was there from the start, just waiting for the right conditions.

As soon as Neil Armstrong and Buzz Aldrin began their descent, their guidance computer started flashing a 1202 alarm. This alarm meant the computer was overloaded with instructions, and it was forced to reset. This alarm was never supposed to occur because the system was designed to never receive more instructions than it could handle.

Unfortunately, a human design error had left a configuration of switches that allowed this obscure state. When a radar was accidentally turned on, it started flooding the computer with unnecessary instructions.

Luckily, the computer was also designed so that it could reset without actually shutting down. It ignored low-priority instructions while acknowledging high-priority instructions. (Take that, modern computers.) So Neil Armstrong was still able to manually pilot the lunar module to the surface.
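To be clear, this is not how the Apollo Guidance Computer's software actually worked; its scheduling and restart logic were far more involved. But the core idea, keeping the most important work when there's more work than capacity, is easy to sketch in Python. `Job` and `shed_load` are hypothetical names, and the job names in the example are illustrative only.

```python
from dataclasses import dataclass, field


@dataclass(order=True)
class Job:
    priority: int                    # higher number = more important
    name: str = field(compare=False)


def shed_load(jobs: list[Job], capacity: int) -> tuple[list[Job], list[Job]]:
    """Keep the `capacity` most important jobs and drop the rest.

    sorted() is stable even with reverse=True, so jobs with equal
    priority keep their arrival order.
    """
    ranked = sorted(jobs, reverse=True)
    return ranked[:capacity], ranked[capacity:]


if __name__ == "__main__":
    queue = [
        Job(10, "steering"),
        Job(9, "altitude display"),
        Job(1, "radar update"),
        Job(1, "radar update"),
        Job(8, "engine throttle"),
    ]
    kept, dropped = shed_load(queue, capacity=3)
    print([job.name for job in kept])     # ['steering', 'altitude display', 'engine throttle']
    print([job.name for job in dropped])  # ['radar update', 'radar update']
```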

There are a few different alternate histories here. If the computer had been made a little less resilient and fully shut down, Apollo 11 never would have landed, or much worse, it would have crashed. Ideally, of course, they would have caught the design error and fixed it. Or they could have forced the condition and prepared for it. In any case, Murphy's law is always in effect.

Are we preparing ourselves for inevitable failures like this? Or are we pretending that some errors are "impossible"? Some of the same lessons about blowing things up apply here. As Kent Beck said, "optimism is an occupational hazard of programming." You may be tempted to move that card to the "done" column and ship it, but are you sure your tests cover the weird scenarios? You may have heard about Netflix's Chaos Monkey, which randomly terminates production machines to ensure that developers create resilient services. Maybe we should consider adding that kind of chaos to our own products? Zapier allows users to build powerful workflows…and sometimes accidentally create powerful problems. Maybe we should create a Zapier chaos monkey that creates weird Zaps so we're forced to build a more resilient workflow engine? What similar things can you do for your products?
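Netflix's real Chaos Monkey terminates actual cloud instances; the Python below is only a toy, in-process illustration of the same idea. `Worker`, `Router`, and `chaos_monkey` are hypothetical names: a router fails over between replicas, and the chaos function kills a random live one so a test can check that requests still get served.

```python
import random
from dataclasses import dataclass


@dataclass
class Worker:
    """Hypothetical service replica."""
    name: str
    alive: bool = True

    def handle(self, request: str) -> str:
        if not self.alive:
            raise ConnectionError(f"{self.name} is down")
        return f"{self.name} handled {request}"


class Router:
    """Hypothetical load balancer that fails over to the next replica."""

    def __init__(self, workers: list[Worker]) -> None:
        self.workers = workers

    def route(self, request: str) -> str:
        for worker in self.workers:
            try:
                return worker.handle(request)
            except ConnectionError:
                continue  # try the next replica
        raise RuntimeError("no healthy workers left")


def chaos_monkey(workers: list[Worker], rng: random.Random) -> Worker:
    """Terminate one randomly chosen live worker and return it."""
    victim = rng.choice([w for w in workers if w.alive])
    victim.alive = False
    return victim


if __name__ == "__main__":
    pool = [Worker("a"), Worker("b"), Worker("c")]
    router = Router(pool)
    rng = random.Random(1)
    for request in ["req-1", "req-2"]:
        killed = chaos_monkey(pool, rng)
        print(f"killed {killed.name}; {router.route(request)}")
```

Running something like this in a test suite turns the "impossible" failure into a scenario you've already rehearsed.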

Go build a rocket as long as you know where you're going, and use plenty of plywood and other stuff you know when that's what works. Whatever you build, it will explode, so blow it up yourself first, and document what happened so you can learn from those explosions.

Hero image by Bill Jelen on Unsplash.

from The Zapier Blog https://ift.tt/38t4GaG
